NASA works with some of the world’s most advanced technologies and makes some of the most significant discoveries in human history. However, according to a special report conducted by the NASA Office of Inspector General and discovered by The Register, NASA’s supercomputing capabilities are insufficient for its tasks, which leads to mission delays. NASA’s supercomputers still rely primarily on CPUs, and one of its flagship supercomputers uses 18,000 CPUs and 48 GPUs.

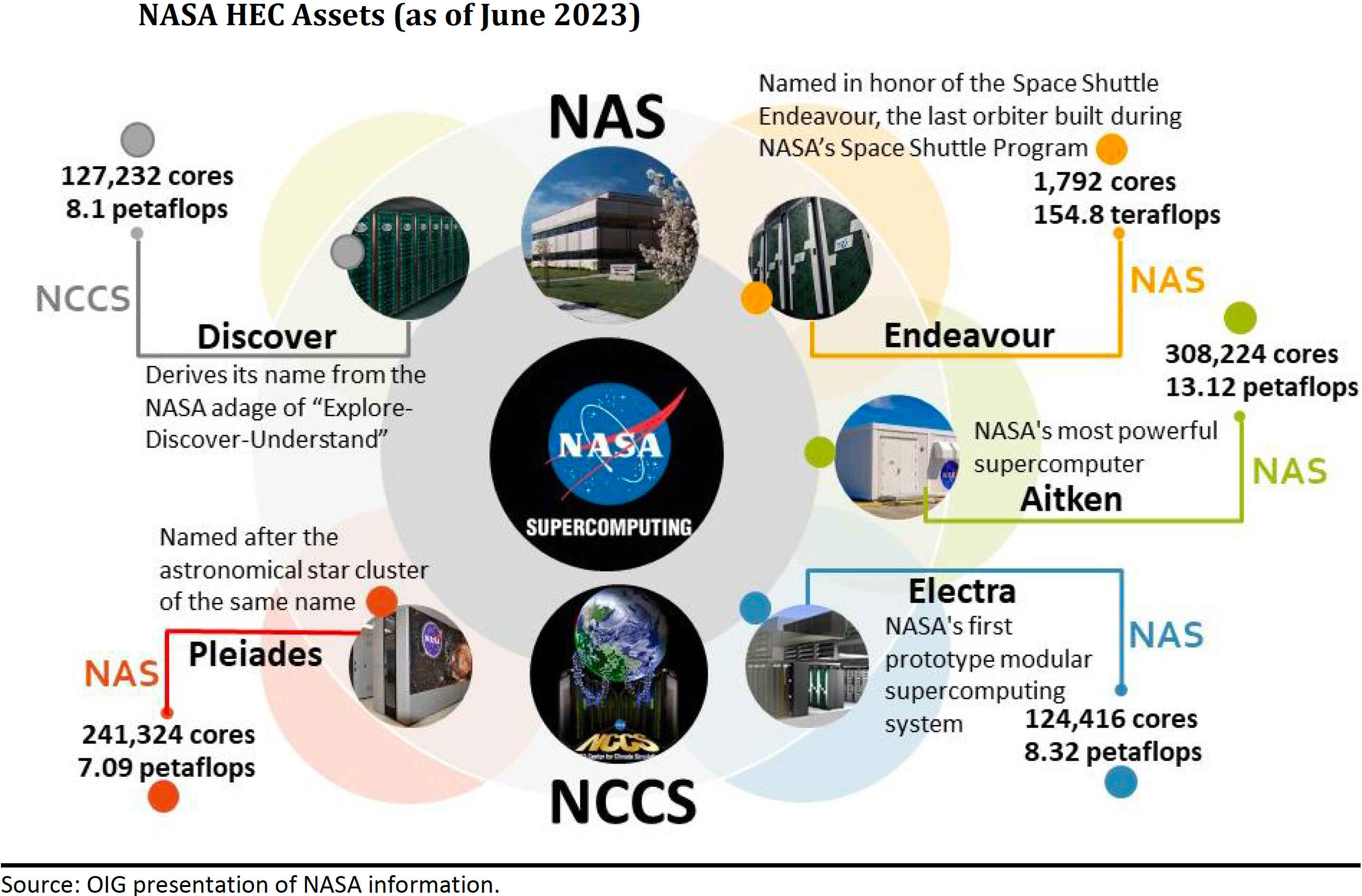

NASA currently has five central high-end computing (HEC) assets located at the NASA Advanced Supercomputing (NAS) facility at Ames Research Center in California and the NASA Center for Climate Simulation (NCCS) at Goddard Space Flight Center in Maryland. The list includes Aitken (13.12 PFLOPS, designed to support the Artemis program, which aims to return humans to the Moon and establish a sustainable presence there), Electra (8.32 PFLOPS), Discover (8.1 PFLOPS, used for climate and weather modeling), Pleiades (7.09 PFLOPS, used for climate simulations, astrophysical studies, and aerospace modeling), and Endeavour (154.8 TFLOPS).

These machines almost exclusively use old CPU cores. For example, all NAS supercomputers combined use over 18,000 CPUs and only 48 GPUs, and NCCS uses even fewer GPUs.
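To put those figures in perspective, here is a quick back-of-the-envelope calculation in Python. It is only a sketch: it simply adds up the peak numbers quoted above (which is not how real-world throughput works), and it treats "over 18,000 CPUs" as exactly 18,000, so the CPU-to-GPU ratio is a lower bound. The variable names are ours, not NASA's.

```python
# Peak performance figures quoted in the article, in PFLOPS.
peak_pflops = {
    "Aitken": 13.12,
    "Electra": 8.32,
    "Discover": 8.1,
    "Pleiades": 7.09,
    "Endeavour": 0.1548,  # 154.8 TFLOPS converted to PFLOPS
}

# Naive aggregate: a plain sum of quoted peaks, not a measured figure.
total_pflops = sum(peak_pflops.values())

# NAS processor counts from the report. "Over 18,000" is taken as
# exactly 18,000 here, which is an assumption and a lower bound.
nas_cpus = 18_000
nas_gpus = 48

cpus_per_gpu = nas_cpus / nas_gpus
gpu_share = nas_gpus / (nas_cpus + nas_gpus)

print(f"Combined quoted peak: {total_pflops:.2f} PFLOPS")
print(f"CPUs per GPU at NAS: at least {cpus_per_gpu:.0f}")
print(f"GPU share of NAS processors: about {gpu_share:.2%}")
```

Under those assumptions, the five systems add up to roughly 36.8 PFLOPS of quoted peak performance, and NAS has at least 375 CPUs for every GPU, with GPUs making up about 0.27% of its processors.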

“HEC officials raised multiple concerns regarding this observation, stating that the inability to modernize NASA’s systems can be attributed to various factors such as supply chain concerns, modern computing language (coding) requirements, and the scarcity of qualified personnel needed to implement the new technologies,” the report says. “Ultimately, this inability to modernize its current HEC infrastructure will directly impact the Agency’s ability to meet its exploration, scientific, and research goals.”

The audit conducted by NASA’s Office of Inspector General also revealed that the agency’s HEC operations are not centrally managed, resulting in inefficiencies and the lack of a cohesive strategy for using on-premises versus cloud computing resources. This uncertainty has led to hesitancy in using cloud resources due to unknown scheduling practices or assumed higher costs. Some missions have resorted to acquiring their own infrastructure to avoid waiting for access to the primary supercomputing resources, which are overwhelmed in large part because they do not rely on the latest HPC technologies.

Additionally, the audit found that the security controls for the HEC infrastructure are often bypassed or not implemented, increasing the risk of cyber attacks.

The report suggests that transitioning to GPUs and modernizing code are essential for meeting NASA’s current and future needs. GPUs offer significantly higher computational throughput for workloads involving parallel processing, which are very common in scientific simulations and modeling.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.