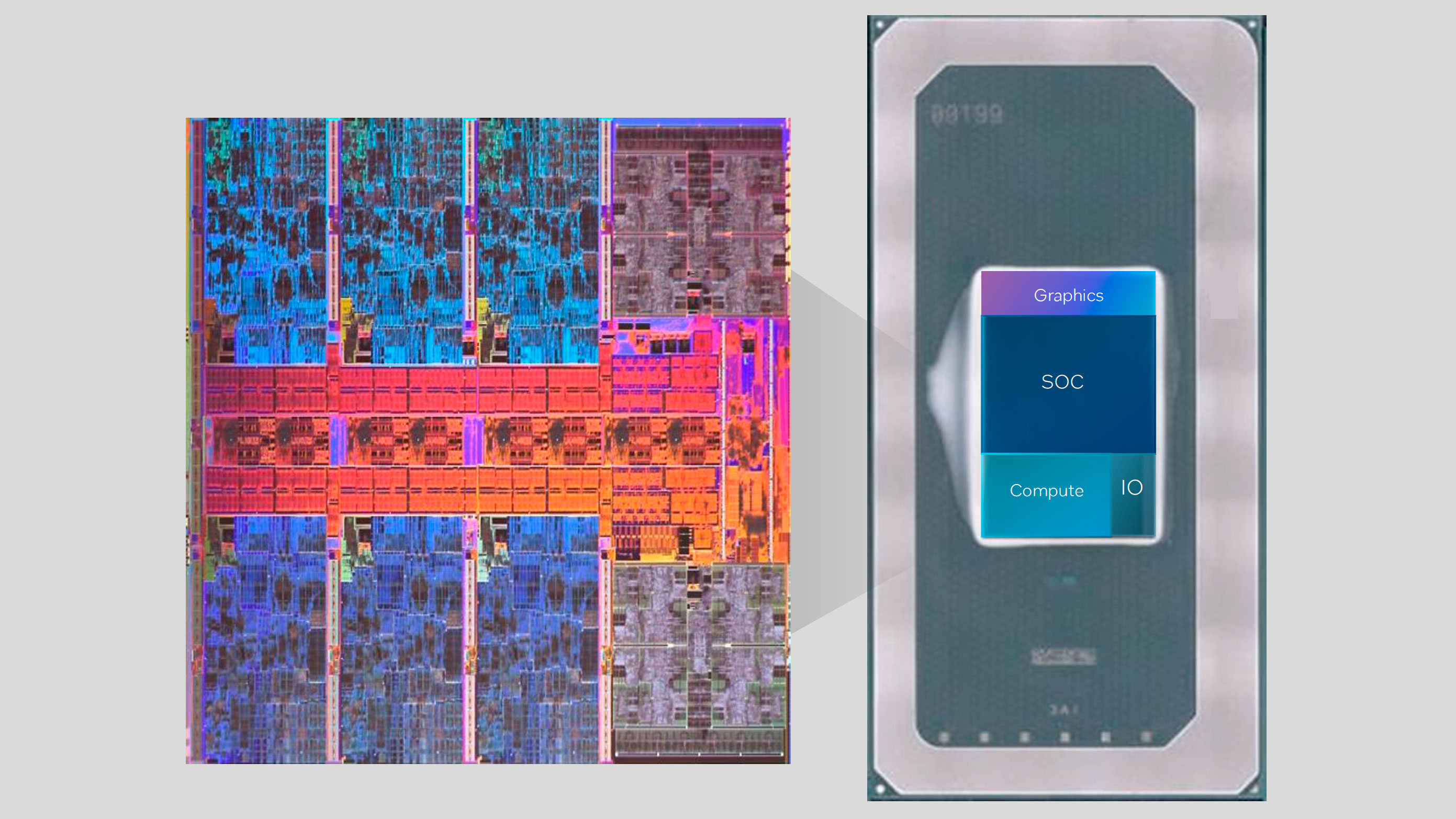

Intel has released its open-source NPU Acceleration Library, enabling Meteor Lake AI PCs to run lightweight LLMs like TinyLlama. It’s primarily intended for developers, but ordinary users with some coding experience could use it to get their AI chatbot running on Meteor Lake.

The library is out now on GitHub, and though Intel was supposed to write up a blog post about the NPU Acceleration Library, Intel Software Architect Tony Mongkolsmai shared it early on X. He showed a demo of the software running TinyLlama 1.1B Chat on an MSI Prestige 16 AI Evo laptop equipped with a Meteor Lake CPU, asking the chatbot about the pros and cons of smartphones and flip phones. The library works on both Windows and Linux.

For devs that have been asking, check out the newly open sourced Intel NPU Acceleration library. I just tried it out on my MSI Prestige 16 AI Evo machine (windows this time, but the library supports Linux as well) and following the GitHub documentation was able to run TinyLlama… pic.twitter.com/UPMujuKGGT (March 1, 2024)

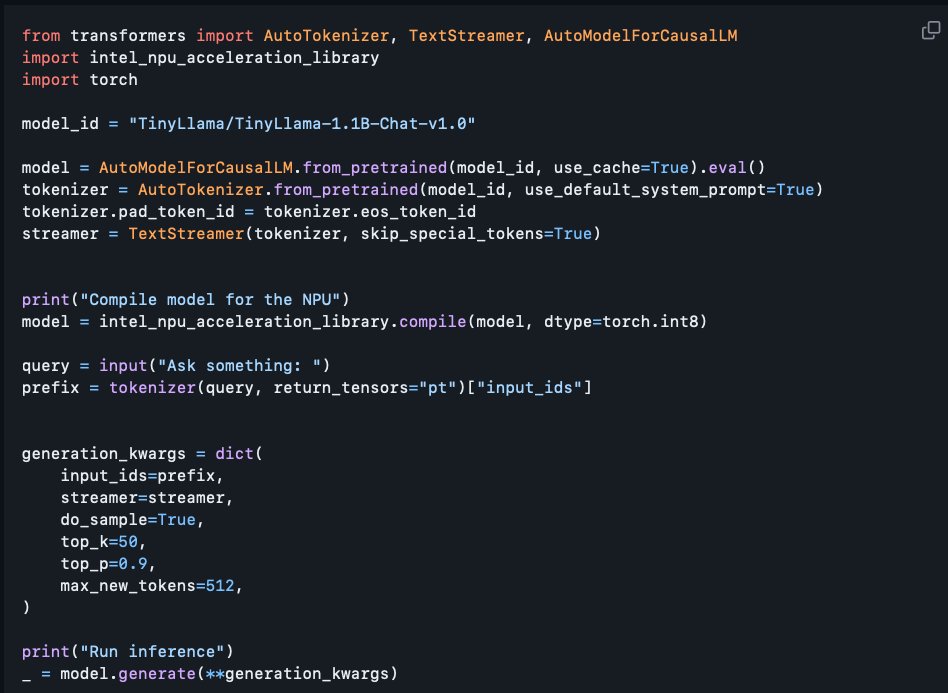

Of course, since the NPU Acceleration Library is made for developers and not ordinary users, it’s not a simple task to use it for your purposes. Mongkolsmai shared the code he wrote to get his chatbot running, and it’s safe to say if you want the same thing running on your PC, you’ll either need a decent understanding of Python or to retype every line shared in the image above and hope it works on your PC.
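For a rough idea of what such a script involves, here is a minimal sketch. It is not a transcription of Mongkolsmai's code. It assumes the `intel_npu_acceleration_library`, `torch`, and `transformers` packages are installed, that the library's `compile(model, dtype=...)` call matches the GitHub README at the time of release (later versions may differ), and that the model is the `TinyLlama/TinyLlama-1.1B-Chat-v1.0` checkpoint on Hugging Face. The helper names `build_generation_kwargs` and `run_chat` and the sampling values are illustrative, not part of the library.

```python
def build_generation_kwargs(input_ids, streamer=None, max_new_tokens=512):
    """Collect sampling settings for Hugging Face `generate` (illustrative values)."""
    return {
        "input_ids": input_ids,
        "streamer": streamer,
        "do_sample": True,
        "top_k": 50,
        "top_p": 0.9,
        "max_new_tokens": max_new_tokens,
    }


def run_chat(query, model_id="TinyLlama/TinyLlama-1.1B-Chat-v1.0"):
    """Load the model, compile it for the NPU, and stream one reply to stdout."""
    # Heavy imports stay local so the helper above works without them installed.
    import torch
    import intel_npu_acceleration_library
    from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

    model = AutoModelForCausalLM.from_pretrained(model_id, use_cache=True).eval()
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    tokenizer.pad_token_id = tokenizer.eos_token_id
    streamer = TextStreamer(tokenizer, skip_special_tokens=True)

    # Assumption: signature as shown in the README at release; check the
    # current documentation before relying on it.
    model = intel_npu_acceleration_library.compile(model, dtype=torch.int8)

    input_ids = tokenizer(query, return_tensors="pt")["input_ids"]
    model.generate(**build_generation_kwargs(input_ids, streamer))
```

On a Meteor Lake machine with the dependencies installed, calling `run_chat("What are the pros and cons of smartphones and flip phones?")` would be the equivalent of the demo prompt.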

Because the NPU Acceleration Library is made explicitly for NPUs, only Meteor Lake can run it at the moment. Arrow Lake and Lunar Lake CPUs due later this year should widen the field of compatible CPUs. Those upcoming CPUs are slated to deliver three times more AI performance than Meteor Lake, likely allowing for running even larger LLMs on laptop and desktop silicon.

The library is not fully featured yet and has shipped with just under half of its planned features. Most notably, it’s missing mixed precision inference that can run on the NPU itself, BFloat16 (a popular data format for AI-related workloads), and NPU-GPU heterogeneous compute, presumably allowing both processors to work on the same AI tasks. The NPU Acceleration Library is brand-new, so it’s unclear how impactful it will be, but fingers crossed that it will result in some new AI software for AI PCs.
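For readers unfamiliar with BFloat16: it keeps the sign bit and all eight exponent bits of a 32-bit float but only seven mantissa bits, so it is effectively the top half of a float32. The sketch below illustrates that relationship using only Python's standard library. The function names are hypothetical, and it has nothing to do with how Intel's library will implement the format.

```python
import math
import struct


def float_to_bfloat16_bits(value: float) -> int:
    """Return the 16-bit BFloat16 pattern for a float (round to nearest even)."""
    if math.isnan(value):
        return 0x7FC0  # a quiet NaN; avoids the rounding step corrupting NaNs
    # Reinterpret the value as its 32-bit IEEE 754 single-precision pattern.
    # Finite values outside the float32 range raise OverflowError here.
    bits32 = struct.unpack(">I", struct.pack(">f", value))[0]
    # Round to nearest even before dropping the low 16 mantissa bits.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a BFloat16 pattern back to a float by zero-filling the low bits."""
    return struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))[0]
```

Round-tripping 3.14159 through these two functions yields 3.140625, which shows the trade-off: the same range as float32 at half the size, with only two to three decimal digits of precision.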


Matthew Connatser is a freelancing writer for Tom’s Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.