One of Nvidia’s advantages in the data center space is that it not only offers leading-edge GPUs for AI and HPC but can also scale the number of its processors across a data center using its own hardware and software. How do you compete with Nvidia if your GPUs are slower and your software stack is not as pervasive as Nvidia’s CUDA? You expand your own scale-out capabilities. This is exactly what Chinese GPU maker Moore Threads has done, according to a South China Morning Post report.

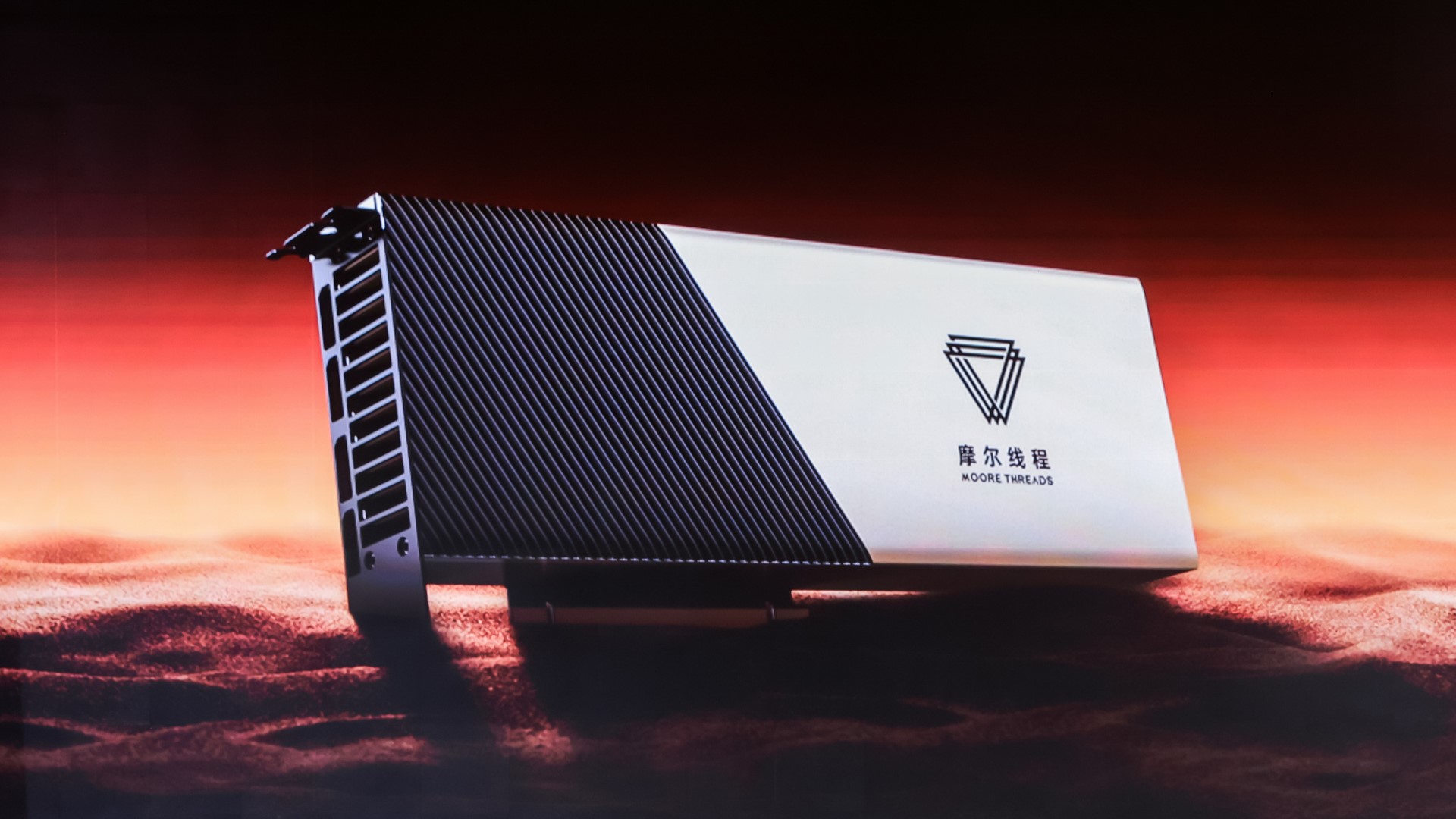

Moore Threads has upgraded its KUAE data center server for AI so that up to 10,000 GPUs can be connected in a single cluster. Each KUAE server integrates eight MTT S4000 GPUs interconnected using the proprietary MTLink technology designed specifically for training and running large language models (LLMs). These GPUs are based on the MUSA architecture and feature 128 tensor cores and 48 GB of GDDR6 memory with 768 GB/s of bandwidth. A 10,000-GPU cluster wields 1,280,000 tensor cores, but its actual performance is unknown, as performance scaling depends on numerous factors.
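To make the cluster arithmetic concrete, here is a minimal Python sketch that multiplies out the per-GPU figures quoted above. The function and constant names are illustrative assumptions, and the totals are simple sums of peak specifications, not a statement about delivered performance.

```python
# Per-GPU and per-server figures as quoted in the article.
GPUS_PER_SERVER = 8
TENSOR_CORES_PER_GPU = 128
MEMORY_GB_PER_GPU = 48


def cluster_totals(num_gpus: int) -> dict:
    """Sum per-GPU specs across a cluster (hypothetical helper).

    These are aggregate specifications only; real throughput depends
    on interconnect, software, and workload, which are not modeled here.
    """
    # Ceiling division: a partially filled server still counts as one.
    servers = -(-num_gpus // GPUS_PER_SERVER)
    return {
        "servers": servers,
        "tensor_cores": num_gpus * TENSOR_CORES_PER_GPU,
        "memory_tb": num_gpus * MEMORY_GB_PER_GPU / 1000,  # decimal TB
    }


totals = cluster_totals(10_000)
print(totals)
```

For a 10,000-GPU cluster this gives 1,250 eight-GPU servers, the 1,280,000 tensor cores mentioned above, and 480 TB of GDDR6 in aggregate.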

This move highlights Moore Threads’ efforts to boost its data center AI capabilities despite being on the U.S. Department of Commerce’s Entity List. Moore Threads’ products, of course, lag behind Nvidia’s GPUs in terms of performance. Even Nvidia’s A100 80 GB GPU, introduced in 2020, offers significantly greater compute performance than the MTT S4000 (624/1,248 INT8 TOPS without/with sparsity vs. 200 INT8 TOPS). Yet, there are claims that the MTT S4000 is competitive against unspecified Nvidia GPUs.
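As a rough illustration of that gap, the sketch below compares the peak INT8 numbers quoted above. The names are illustrative assumptions, the two A100 values are Nvidia's peak figures without and with structured sparsity, and peak TOPS ratios say nothing definitive about real training or inference workloads.

```python
import math

# Peak INT8 throughput in TOPS, as quoted in the article.
A100_INT8_TOPS_DENSE = 624
A100_INT8_TOPS_SPARSE = 1248
S4000_INT8_TOPS = 200


def peak_ratio(reference_tops: float, candidate_tops: float) -> float:
    """How many times higher the reference GPU's peak figure is."""
    return reference_tops / candidate_tops


def gpus_to_match(reference_tops: float, candidate_tops: float) -> int:
    """Candidate GPUs needed to reach the reference peak on paper,
    assuming perfect scaling (an idealization that never holds exactly)."""
    return math.ceil(reference_tops / candidate_tops)


for label, tops in [("dense", A100_INT8_TOPS_DENSE),
                    ("sparse", A100_INT8_TOPS_SPARSE)]:
    ratio = peak_ratio(tops, S4000_INT8_TOPS)
    count = gpus_to_match(tops, S4000_INT8_TOPS)
    print(f"A100 ({label}): {ratio:.2f}x the S4000 peak, "
          f"about {count} S4000s to match on paper")
```

On paper, the A100's peak is roughly 3.1x to 6.2x that of the MTT S4000, depending on whether sparsity is used.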

Moore Threads, which was founded in 2020 by a former Nvidia China executive, does not have access to leading-edge process technologies due to U.S. export rules, as it is blacklisted by the Biden administration. However, the company is developing new GPUs for gaming (these graphics cards aren’t on our list of the best graphics cards) and is pushing forward in the AI sector despite significant obstacles.

So far, Moore Threads has forged strategic partnerships with major state-run telecom operators, including China Mobile and China Unicom, as well as China Energy Engineering Corp. and Guilin Huajue Big Data Technology. These collaborations aim to develop three new computing cluster projects, further advancing China’s AI capabilities.

Moore Threads recently completed a financing round, raising up to 2.5 billion yuan (approximately US$343.7 million). This influx of funds is expected to support its ambitious expansion plans and technology advancements. However, without access to advanced process technologies offered by TSMC, Intel Foundry, and Samsung Foundry, the firm faces numerous challenges on the path to developing next-gen GPUs.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.