Software developer and self-confessed spreadsheet addict Ishan Anand has jammed GPT-2 into Microsoft Excel. More astonishingly, it works – providing insight into how large language models (LLMs) work, and how the underlying Transformer architecture goes about its smart next-token prediction. “If you can understand a spreadsheet, then you can understand AI,” boasts Anand. The 1.25GB spreadsheet has been made available on GitHub for anyone to download and play with.

Naturally, this spreadsheet implementation of GPT-2 is somewhat behind the LLMs available in 2024, but GPT-2 was state-of-the-art and grabbed plenty of headlines in 2019. It is important to remember that GPT-2 is not something to chat with, as it comes from before the ‘chat’ era. ChatGPT came from work done to conversationally prompt GPT-3 in 2022. Moreover, Anand uses the GPT-2 Small model here: the XLSB Microsoft Excel Binary file holds 124 million parameters, whereas the full version of GPT-2 used 1.5 billion parameters (and GPT-3 moved the bar again, with up to 175 billion parameters).
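For a sense of where the 124 million figure comes from, here is a rough back-of-the-envelope count. It assumes the publicly documented GPT-2 Small settings (a 50,257-token vocabulary, 1,024 positions, 768-wide embeddings, 12 layers) and an output layer that reuses the token embedding table; none of these numbers come from Anand's spreadsheet itself, and the function name is just for illustration.

```python
# Assumed GPT-2 Small hyperparameters (from the public model release,
# not from the article or the spreadsheet).
VOCAB_SIZE = 50257
CONTEXT_LEN = 1024
D_MODEL = 768
N_LAYERS = 12


def gpt2_param_count(vocab, ctx, d, layers):
    """Count weights and biases in a GPT-2 style Transformer."""
    # Token embedding table plus learned position embedding table.
    embeddings = vocab * d + ctx * d
    # Attention: fused query/key/value projection, then output projection.
    attention = (d * 3 * d + 3 * d) + (d * d + d)
    # Feed-forward network: expand to 4*d, then project back to d.
    mlp = (d * 4 * d + 4 * d) + (4 * d * d + d)
    # Two layer norms per block, each with a scale and a shift vector.
    layer_norms = 2 * (2 * d)
    per_block = attention + mlp + layer_norms
    # One final layer norm; the output layer shares the token embeddings.
    final_norm = 2 * d
    return embeddings + layers * per_block + final_norm


total = gpt2_param_count(VOCAB_SIZE, CONTEXT_LEN, D_MODEL, N_LAYERS)
print(f"{total:,} parameters")  # prints "124,439,808 parameters"
```

Roughly 39 million of those parameters sit in the embedding tables alone, which goes some way to explaining why even the Small model makes for a hefty spreadsheet.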

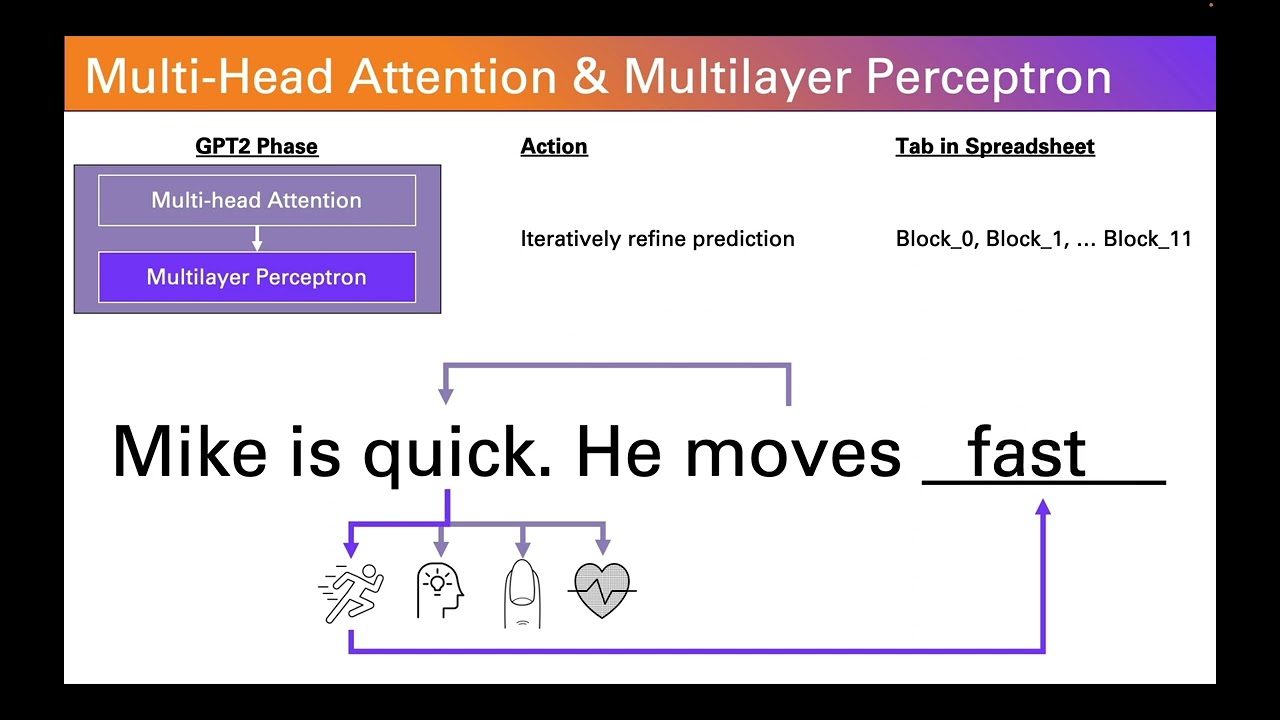

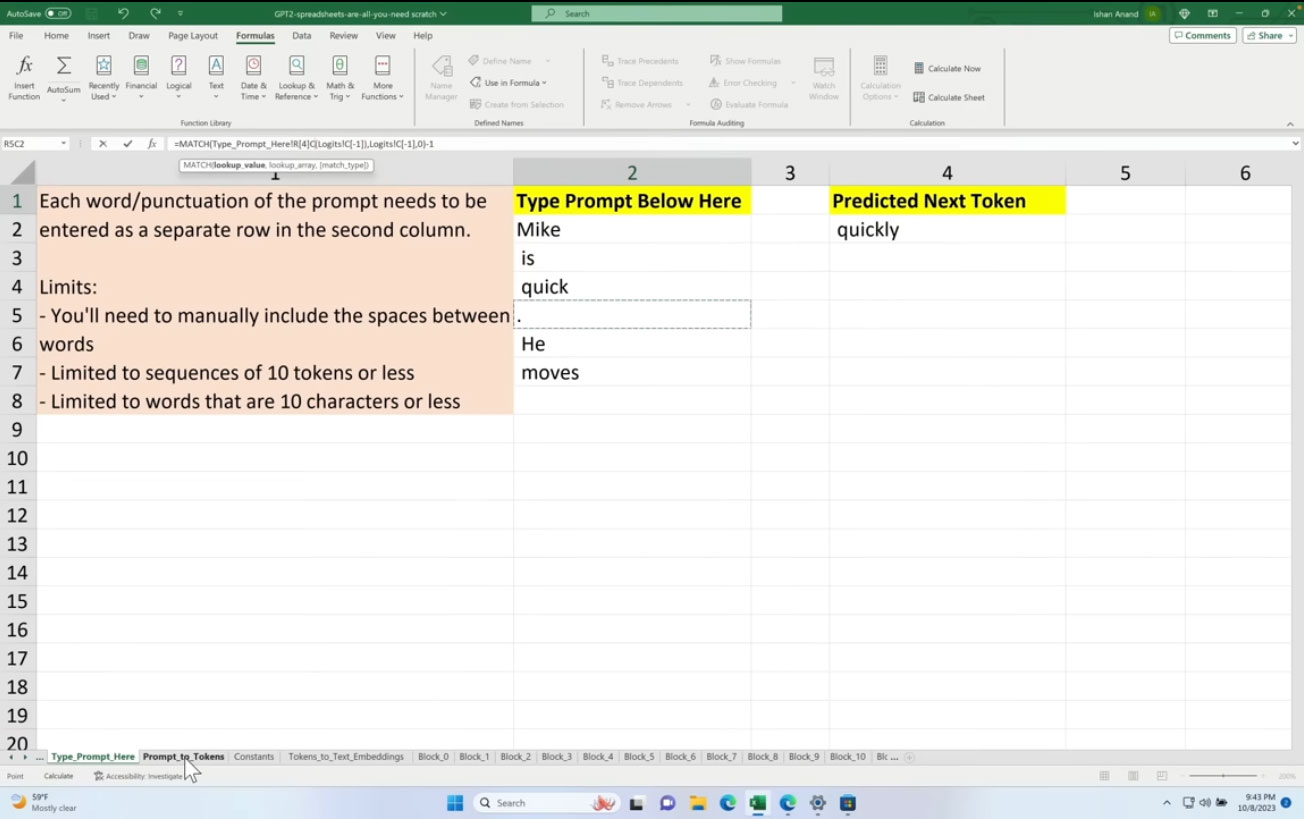

GPT-2 works mainly on smart ‘next-token prediction’, where the Transformer architecture language model completes an input with the most likely next part of the sequence. This spreadsheet can handle just 10 tokens of input, which is tiny compared to the 128,000 tokens that GPT-4 Turbo can handle. However, it is still good for a demo and Anand claims that his “low-code introduction” is ideal as an LLM grounding for the likes of tech execs, marketers, product managers, AI policymakers, ethicists, as well as for developers and scientists who are new to AI. Anand asserts that this same Transformer architecture remains “the foundation for OpenAI’s ChatGPT, Anthropic’s Claude, Google’s Bard/Gemini, Meta’s Llama, and many other LLMs.”
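To make ‘next-token prediction’ a little more concrete, here is a minimal sketch of the final step: the model produces one raw score per vocabulary entry, those scores are turned into probabilities, and the most likely token wins. The four-word vocabulary and the scores below are made up for illustration, and always picking the top token is an assumption of this sketch (real systems often sample instead).

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_next(vocab, logits):
    """Greedy pick: return the highest-probability token and its probability."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]


# Hypothetical vocabulary and model scores for a prompt like "The cat sat on the".
vocab = ["mat", "dog", "moon", "sofa"]
logits = [3.1, 0.2, -1.0, 2.4]

token, prob = predict_next(vocab, logits)
print(token, round(prob, 2))
```

GPT-2 does the same thing across a vocabulary of tens of thousands of tokens rather than four.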

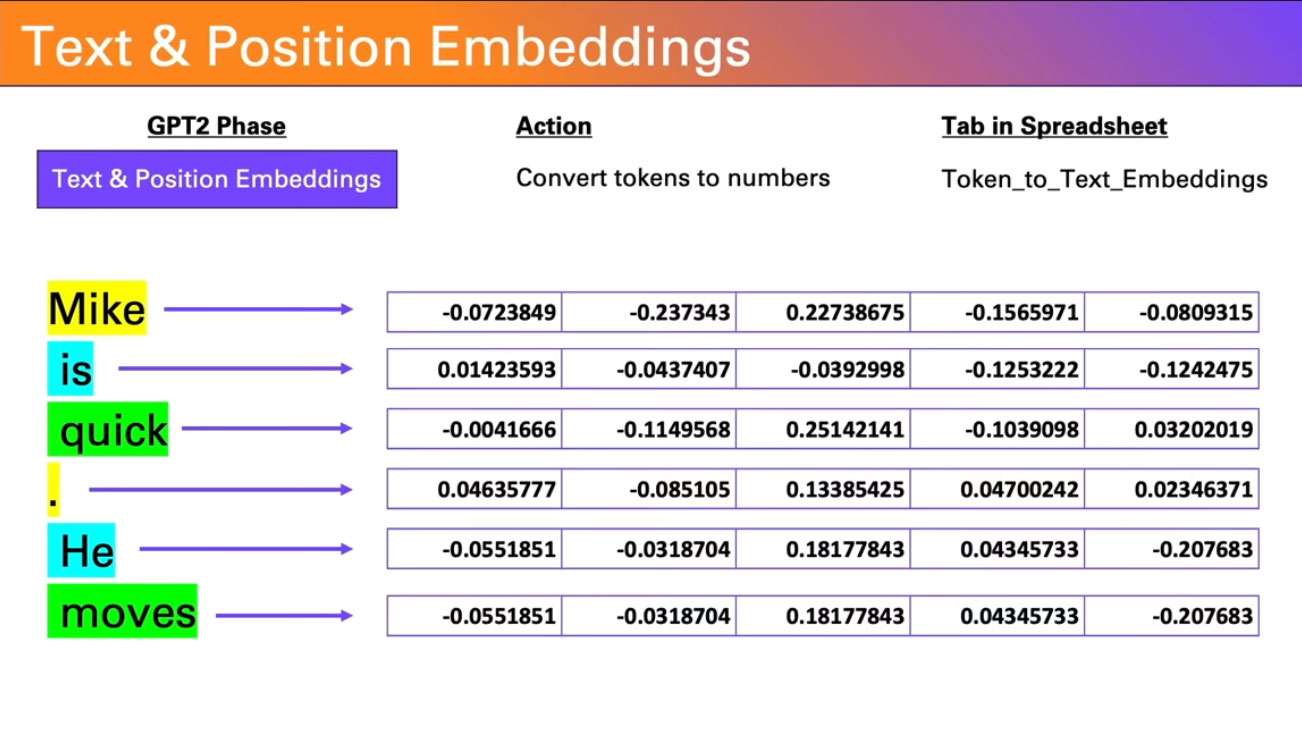

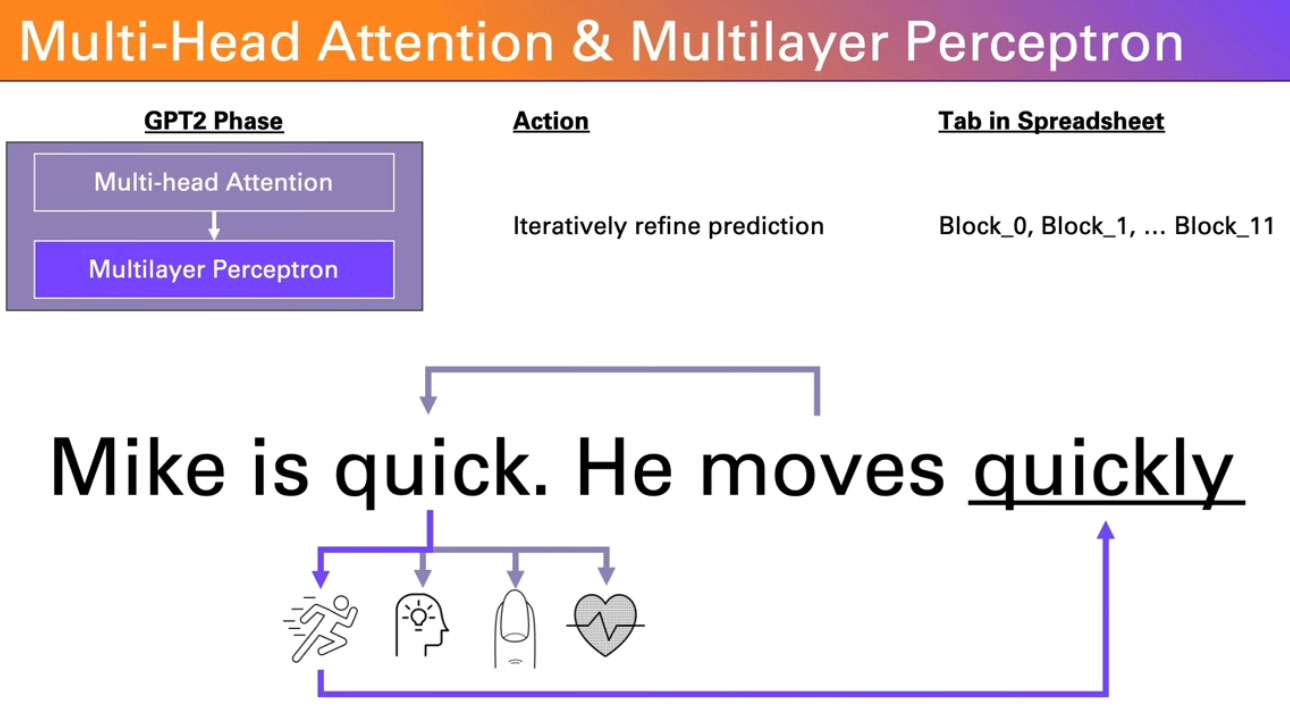

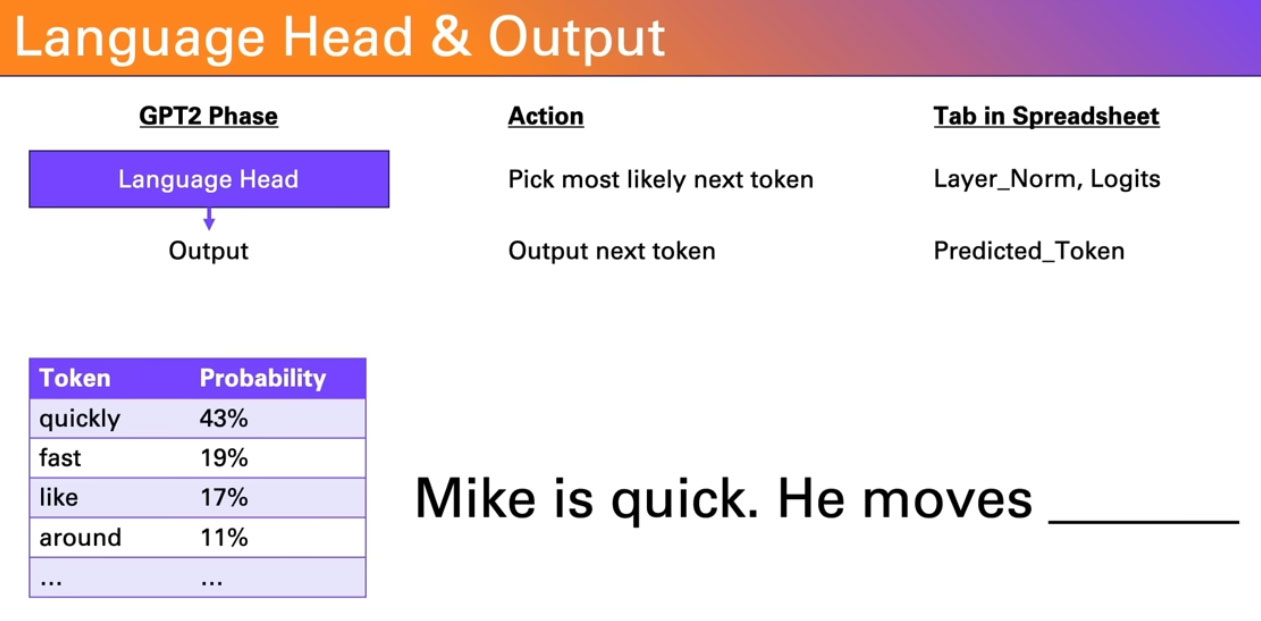

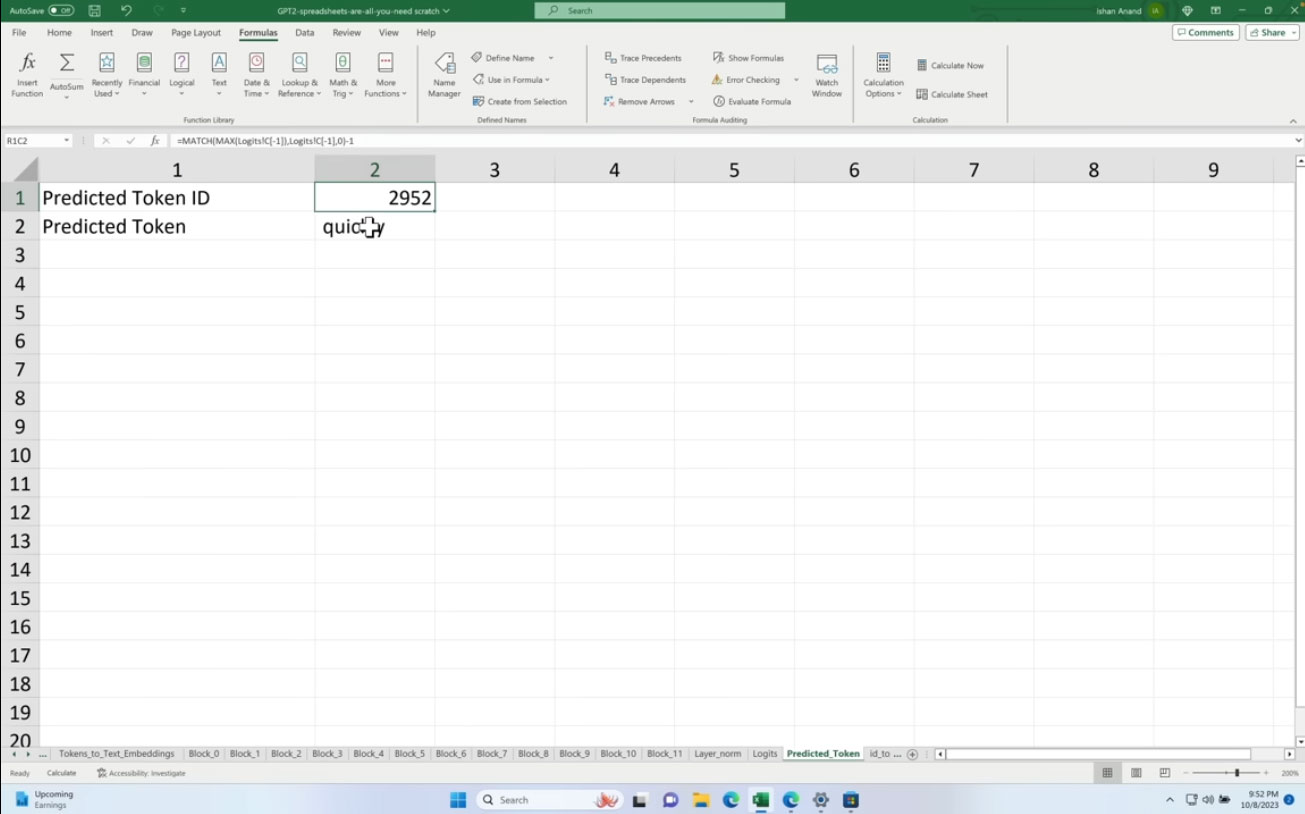

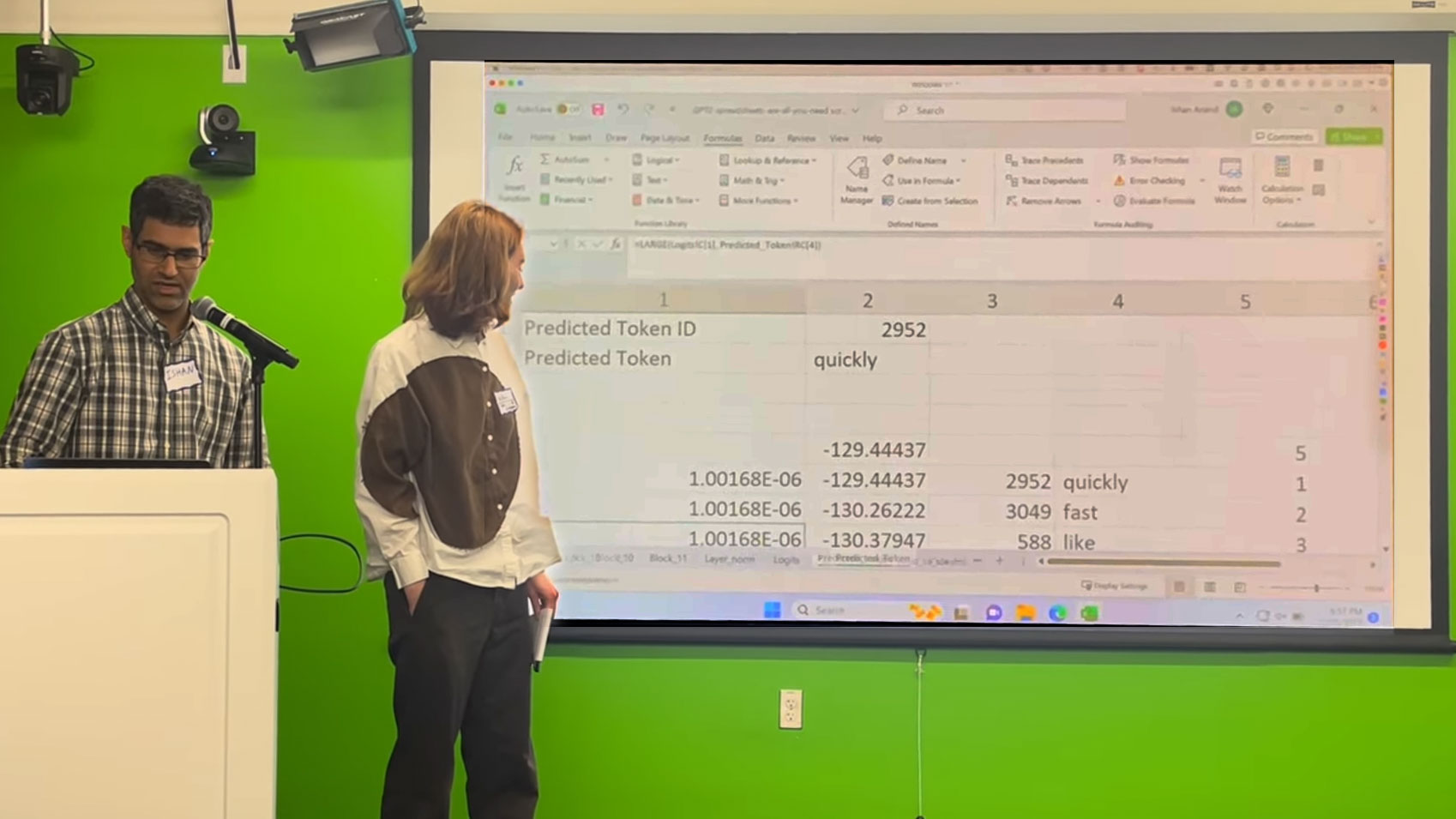

Above you can see Anand explain his GPT-2 as a spreadsheet implementation. In the multi-sheet work, the first sheet contains any prompt you want to input (but remember the 10-token restriction). He then talks us through word tokenization, text positions and weightings, iterative refinement of next-word prediction, and finally picking the output token – the predicted next word of the sequence.
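The stages Anand describes can be strung together in a toy sketch like the one below. To be clear, this is not his spreadsheet logic: the tiny vocabulary, the random weights, the whitespace tokenizer (GPT-2 actually uses byte-pair encoding) and the crude averaging step standing in for attention are all assumptions made to keep the example short. Only the 10-token limit comes from the article. With random weights the predicted word is meaningless; the point is the shape of the pipeline.

```python
import random

MAX_TOKENS = 10  # the spreadsheet's input limit, per the article
D = 8            # toy embedding width (GPT-2 Small uses 768)
VOCAB = ["mike", "is", "quick", "he", "moves", "slowly", "quickly"]

rng = random.Random(0)  # fixed seed so the toy weights are reproducible


def rand_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


TOKEN_EMB = rand_matrix(len(VOCAB), D)          # one vector per token
POS_EMB = rand_matrix(MAX_TOKENS, D)            # one vector per position
BLOCKS = [rand_matrix(D, D) for _ in range(2)]  # stand-ins for Transformer blocks


def tokenize(prompt):
    """Step 1: split the prompt into token ids (whitespace split, toy only)."""
    ids = [VOCAB.index(word) for word in prompt.lower().split()]
    if len(ids) > MAX_TOKENS:
        raise ValueError("prompt exceeds the 10-token limit")
    return ids


def embed(ids):
    """Step 2: add each token's vector to the vector for its position."""
    return [[t + p for t, p in zip(TOKEN_EMB[i], POS_EMB[pos])]
            for pos, i in enumerate(ids)]


def refine(states):
    """Step 3: each block nudges every position using the tokens before it."""
    for weights in BLOCKS:
        new_states = []
        for pos in range(len(states)):
            visible = states[: pos + 1]  # a token only sees itself and earlier tokens
            context = [sum(col) / len(visible) for col in zip(*visible)]
            update = [sum(c * row[j] for c, row in zip(context, weights))
                      for j in range(D)]
            # Residual connection: keep the old state, add a small update.
            new_states.append([s + 0.1 * u for s, u in zip(states[pos], update)])
        states = new_states
    return states


def pick_output(states):
    """Step 4: score every vocabulary entry against the last position."""
    last = states[-1]
    logits = [sum(a * b for a, b in zip(last, emb)) for emb in TOKEN_EMB]
    return VOCAB[max(range(len(VOCAB)), key=logits.__getitem__)]


def predict(prompt):
    return pick_output(refine(embed(tokenize(prompt))))


print(predict("Mike is quick he moves"))
```

Words outside the toy vocabulary raise a `ValueError` here, whereas GPT-2's real tokenizer can break any text into known sub-word pieces.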

As mentioned above, GPT-2 Small is a relatively compact model. Despite using a model that might not even be classified as a ‘large’ language model in 2024, Anand is still stretching the abilities of the Excel application. The developer warns against attempting to use this Excel file on Mac (frequent crashes and freezes) or trying to load it on one of the cloud spreadsheet apps – as it won’t work right now. Also, the latest version of Excel is recommended. Remember, this spreadsheet is largely an educational exercise, and fun for Anand. Lastly, one of the benefits of using Excel on your PC is that this LLM runs 100% locally, with no API calls to the cloud.

Mark Tyson is a news editor at Tom’s Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.