Apple has revealed that it didn’t use Nvidia’s hardware accelerators to develop its recently revealedApple Intelligencefeatures. According to an official Appleresearch paper(PDF), it instead relied onGoogleTPUs to crunch the training data behind the Apple Intelligence Foundation Language Models.

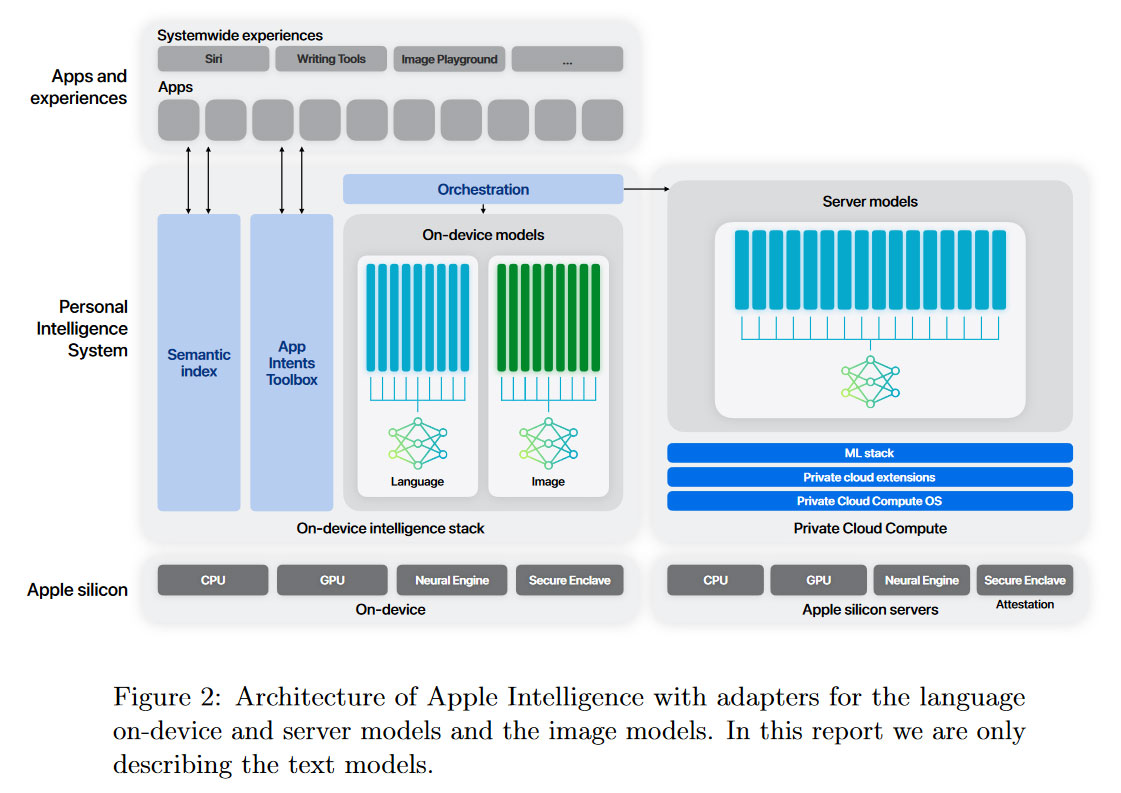

Systems packingGoogle TPUv4and TPUv5 chips were instrumental to the creation of the Apple Foundation Models (AFMs). These models, AFM-server and AFM-on-device models, were designed to power online and offline Apple Intelligence features which were heralded back at WWDC 2024 in June.

AFM-server is Apple’s biggest LLM, and thus it remains online only. According to the recently released research paper, Apple’s AFM-server was trained on 8,192 TPUv4 chips “provisioned as 8 × 1,024 chip slices, where slices are connected together by the data-center network (DCN).” Pre-training was a triple-stage process, starting with 6.3T tokens, continuing with 1T tokens, and then context-lengthening using 100B tokens.

Apple said the data used to train its AFMs included info gathered from the Applebot web crawler (heeding robots.txt) plus various licensed “high-quality” datasets. It also leveraged carefully chosen code, math, and public datasets.

Of course, the ARM-on-device model is significantly pruned, but Apple reckons its knowledge distillation techniques have optimized this smaller model’s performance and efficiency. The paper reveals that AFM-on-device is a 3B parameter model, distilled from the 6.4B server model, which was trained on the full 6.3T tokens.

Unlike AFM-server training, Google TPUv5 clusters were harnessed to prepare the ARM-on-device model. The paper reveals that “AFM-on-device was trained on one slice of 2,048 TPUv5p chips.”

It is interesting to see Apple has released such a detailed paper, revealing techniques and technologies behind Apple Intelligence. The company isn’t renowned for its transparency but seems to be trying hard to impress in AI, perhaps as it has been late to the game.

Get Tom’s Hardware’s best news and in-depth reviews, straight to your inbox.

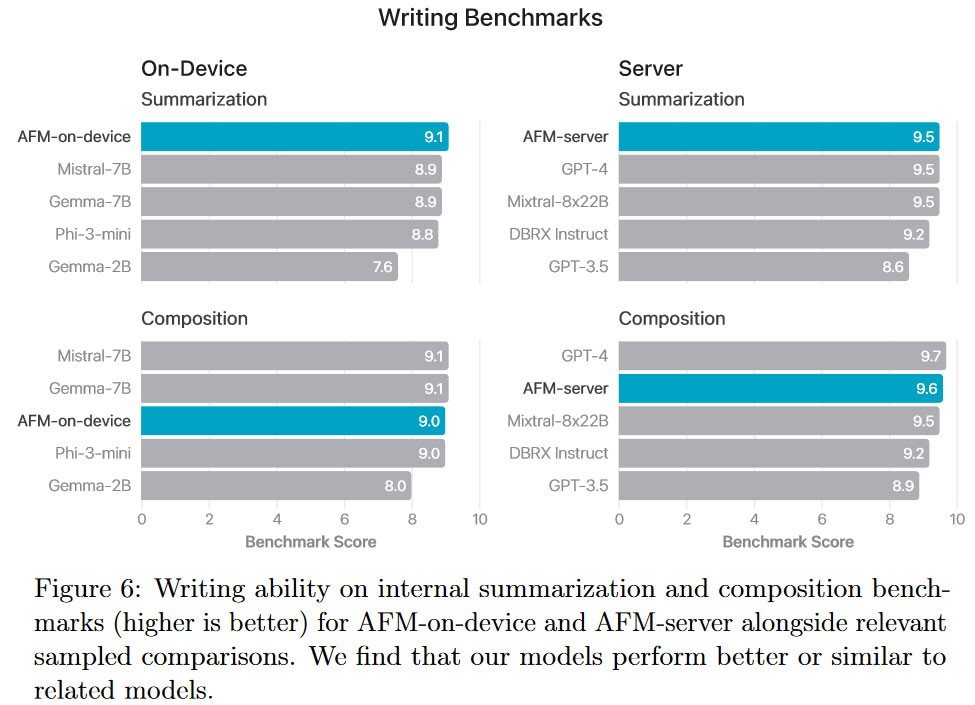

According to Apple’s in-house testing, AFM-server and AFM-on-device excel inbenchmarkssuch as Instruction Following, Tool Use, Writing, and more. We’ve embedded the Writing Benchmark chart, above, for one example.

If you are interested in some deeper details regarding the training and optimizations used by Apple, as well as further benchmark comparisons, check out the PDF linked in the intro.

Mark Tyson is a news editor at Tom’s Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.